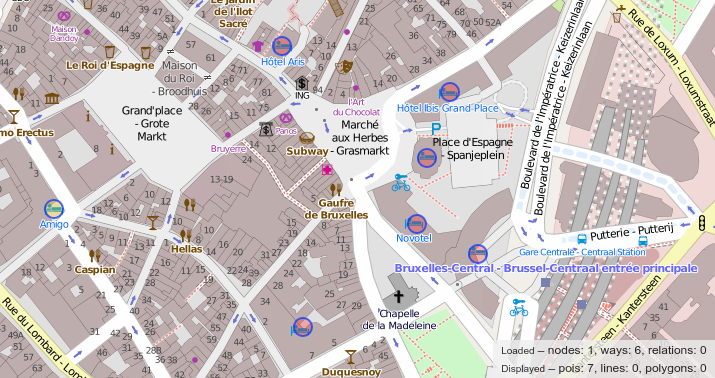

About a year ago, I started working as the maintainer of the iD editor for the OSMF. Here’s a short recap of the last year or so, and a brief outlook on what I think lies ahead.

A look back

The year was characterized by the reactivation of processes which had been dormant for a little while before I started:

The tagging schema repository has received regular releases (on average about one major update every other month) after having been mostly dormant the year before. Many of the improvements to the tagging schema came from various contributors in the community: thanks to everyone who took part in this, including everyone actively translating on Transifex!

For iD itself, I soon learned that it is better to make small, iterative improvements rather than tackle big reworks all at once. Therefore, my focus in the last year was primarily on stability, bug fixes and incremental improvements. Still, there are a few notable improvements from last year worth pointing out:

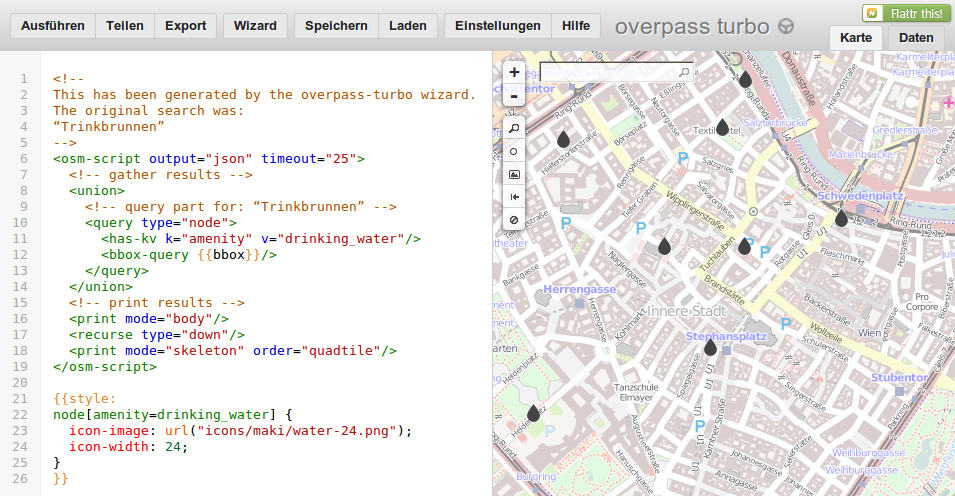

- version 2.21 switches the development build system to a more modern one based on esbuild and drops support for Internet Explorer 11

- version 2.22 contains a first step towards making iD understand lifecycle prefixes of tags

- version 2.23 significantly improves startup times, improves input fields in various ways and introduces a few under-the-hood improvements to how presets are managed

- version 2.24 adds a remaining input length indicator for fields which are constrained to OSM tags’ maximum length of 255 characters and introduces a new field type for directional tags

A glimpse ahead

Another highlight of 2022 was the State of the Map conference in Firenze, where I spoke about the history of the iD editor (in summer 2023, it will celebrate 10 years as the default editor on osm.org!) and outlined the high-level, mid- to long-term goals I would like to tackle for iD. I identified five big topics to work on:

- iD is the first mapping experience for many contributors: it should remain friendly and easy to use, and accessibility is an important area where iD can still be improved

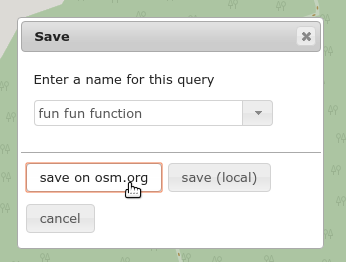

- as OSM matures, it will become more important to tailor the editing experience to mappers’ individual needs: away from a single general approach which has to work for everyone, towards a system which allows for more customization

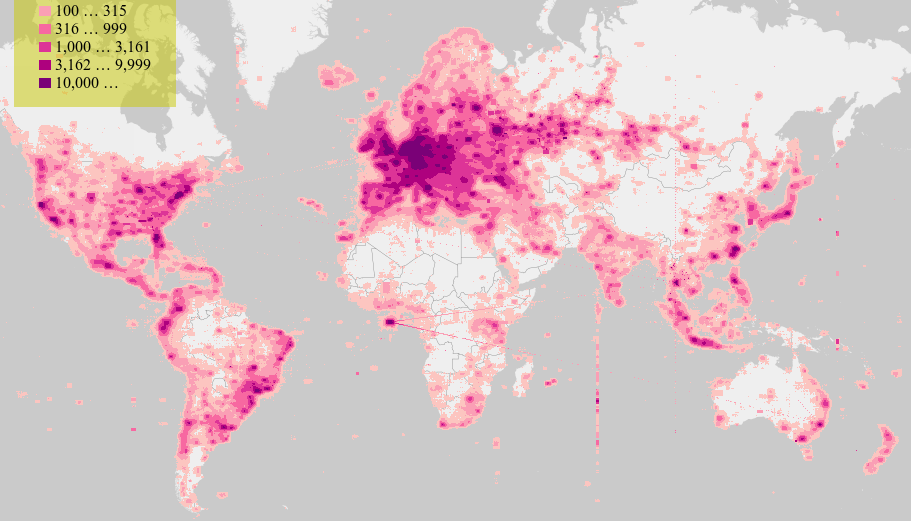

- as the amount of data in OSM grows continuously, performance bottlenecks become apparent: iD should be as responsive as technically feasible

- mapping should be a delightful experience: recurring mapping tasks should not feel cumbersome to perform in iD

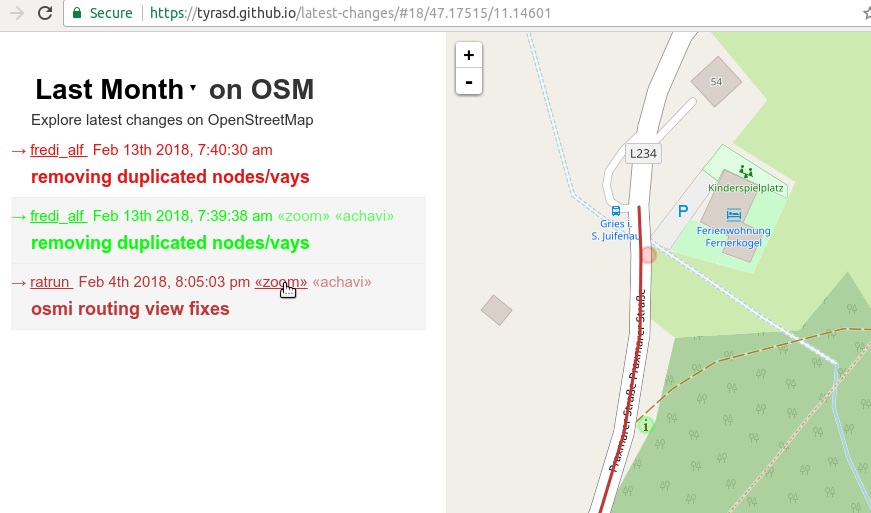

- when a region gets more and more “completely” mapped in OSM, the topic of map gardening becomes important: iD should make it easy to keep the map up to date

A next step: community gatherings

Starting this year, I would like to pick up the “tradition” of hosting regular online community sessions to chat about the iD editor. I’d like to hold these at regular intervals, initially about once a month, and at varying times of the day.

For each of these meetings, I will pick a topic which I’d like to highlight in the form of a short tutorial, hands-on session and/or presentation. My goal for this part is to share tips and tricks with you and, hopefully, to spark interesting discussions. Of course, these meetups will also be a platform to ask general questions and to give updates about current work in progress. Any other feedback is also very welcome!

Here are some examples of topics which could be the focus of these sessions:

- reorganizing the background imagery layer widget

- adding an “improve geometry” mode to iD

- how to make UI fields better: iconography, grouping of …

- iD’s map rendering technology stack

- how localization is done in iD

- lifecycle prefixes in OSM tags

Are there any further topics you are eager to discuss?

I’ve scheduled the first of these chats on February 20 at 4pm UTC. It will take place on the OSMF’s BigBlueButton server in the room https://osmvideo.cloud68.co/user/mar-ljx-3qx-58s and the first topic will be: the state of the tagging presets: recent changes & development tips.

See you soon!

Martin

PS: To everyone who has recently opened a PR or issue on the iD repository and is still waiting for my feedback: I have not forgotten about you and will do my best to get back to you as soon as possible. In any case, thank you very much for your efforts and patience!